Note

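scikit-learn's model_selection module provides functions and classes for splitting data into training and test sets, for splitting and evaluating with cross-validation, and for tuning an Estimator's hyperparameters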

1. Splitting training/test data sets - train_test_split()

What goes wrong if we train and predict using only the training data set?

- The following example trains and predicts on the same data set

from sklearn.datasets import load_iris

from sklearn.tree import DecisionTreeClassifier

from sklearn.metrics import accuracy_score

iris = load_iris()

dt_clf = DecisionTreeClassifier()

train_data = iris.data

train_label = iris.target

dt_clf.fit(train_data, train_label)

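# Predict on the same data set that was used for training

pred = dt_clf.predict(train_data)

print('Prediction accuracy:', accuracy_score(train_label, pred))

>>> Prediction accuracy: 1.0

- A prediction accuracy of 1.0 means the accuracy is 100%

- In other words, the model was tested on the very questions whose answers it had already seen!

- Therefore, predictions must be made on a dedicated test data set, not on the data set used for training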

scikit-learn's train_test_split()

- Makes it easy to split the original data set into training and test data sets

train_test_split() takes the feature data set as its first argument and the label data set as its second, and optionally accepts the following parameters

- test_size: how much of the full data set to sample as the test set (default: 0.25, i.e. 25%)

- train_size: how much of the full data set to sample as the training set (rarely used, because test_size is normally specified instead)

- shuffle: whether to shuffle the data before splitting (default: True); shuffling spreads the samples out so that the training and test sets are more representative

- random_state: a seed that makes every call produce the same training/test split (train_test_split() splits at random, so without random_state each run produces a different split)

train_test_split() returns a tuple containing, in order, the train-feature, test-feature, train-label, and test-label data sets
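The parameters above can be checked with a small sketch. The variable names here are illustrative only, and the split sizes assume the 150-row iris data set used throughout these notes.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split

iris = load_iris()
X, y = iris.data, iris.target

# With neither size given, test_size falls back to 0.25
X_tr, X_te, y_tr, y_te = train_test_split(X, y, random_state=0)
default_sizes = (X_tr.shape[0], X_te.shape[0])

# The same random_state gives an identical split on every call
_, a_test, _, _ = train_test_split(X, y, test_size=0.3, random_state=121)
_, b_test, _, _ = train_test_split(X, y, test_size=0.3, random_state=121)
same_split = np.array_equal(a_test, b_test)

# shuffle=False keeps the original row order, so the test set is simply the tail
_, tail_test, _, tail_labels = train_test_split(X, y, test_size=0.3, shuffle=False)

print(default_sizes, same_split, np.unique(tail_labels))
```

Because the iris rows are sorted by label, the unshuffled test set contains a single class, which is one reason shuffle is left at its default of True.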

- Split the iris data set with train_test_split() into a test set of 30% and a training set of 70%

from sklearn.tree import DecisionTreeClassifier

from sklearn.metrics import accuracy_score

from sklearn.datasets import load_iris

from sklearn.model_selection import train_test_split

dt_clf = DecisionTreeClassifier( )

iris_data = load_iris()

X_train, X_test, y_train, y_test = train_test_split(iris_data.data, iris_data.target, test_size=0.3, random_state=121)

- Train DecisionTreeClassifier on the training data and measure the prediction accuracy

dt_clf.fit(X_train, y_train)

pred = dt_clf.predict(X_test)

print('Prediction accuracy: {0:.4f}'.format(accuracy_score(y_test, pred)))

>>> Prediction accuracy: 0.9556

The iris data set has only 150 samples, so a 30% test set holds just 45 samples, which is too few to judge the algorithm's predictive performance reliably → Guaranteeing enough data for training matters, but it is just as important to evaluate the trained model on a variety of data!

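2. Cross-Validation (CV)

Overfitting

The model is so heavily optimized for the training data that its predictive performance drops sharply when it predicts on other data

- Repeatedly evaluating against a fixed training set and a fixed test set tends to steer the model toward performing well on that test set only

- The result is a model overfitted to that particular test set, whose performance degrades when different test data arrives → To mitigate this, cross-validation trains and evaluates on more varied splits!

Cross-Validation (CV)

A method of evaluating a model's generalization performance by splitting the given data into training data and validation data

- To guard against a skewed split, training and evaluation are carried out on several separate pairs of training and validation data sets

- The evaluation results from each pair make model optimization, such as hyperparameter tuning, easier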

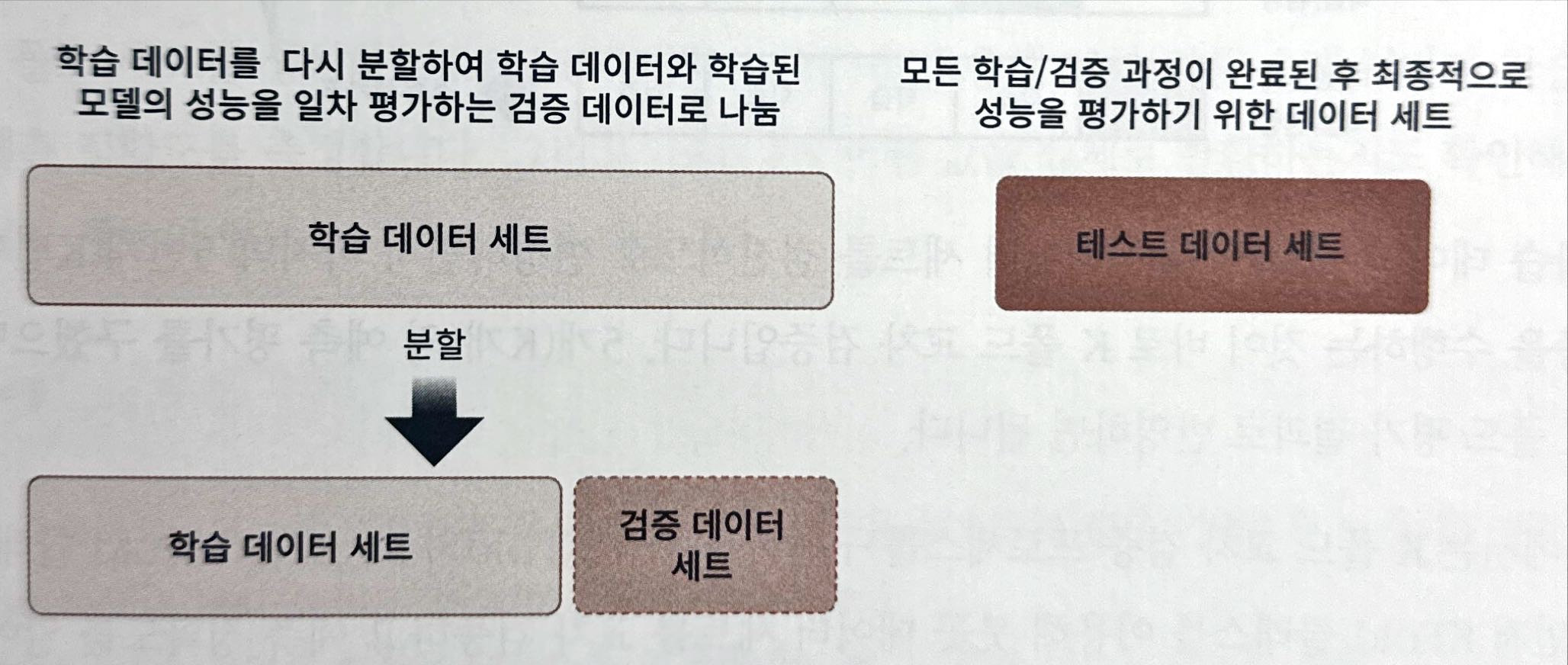

- For most ML models, performance is first evaluated with cross-validation, and the model is then evaluated one final time on the test data set

a. K-fold cross-validation

K-Fold Cross-Validation

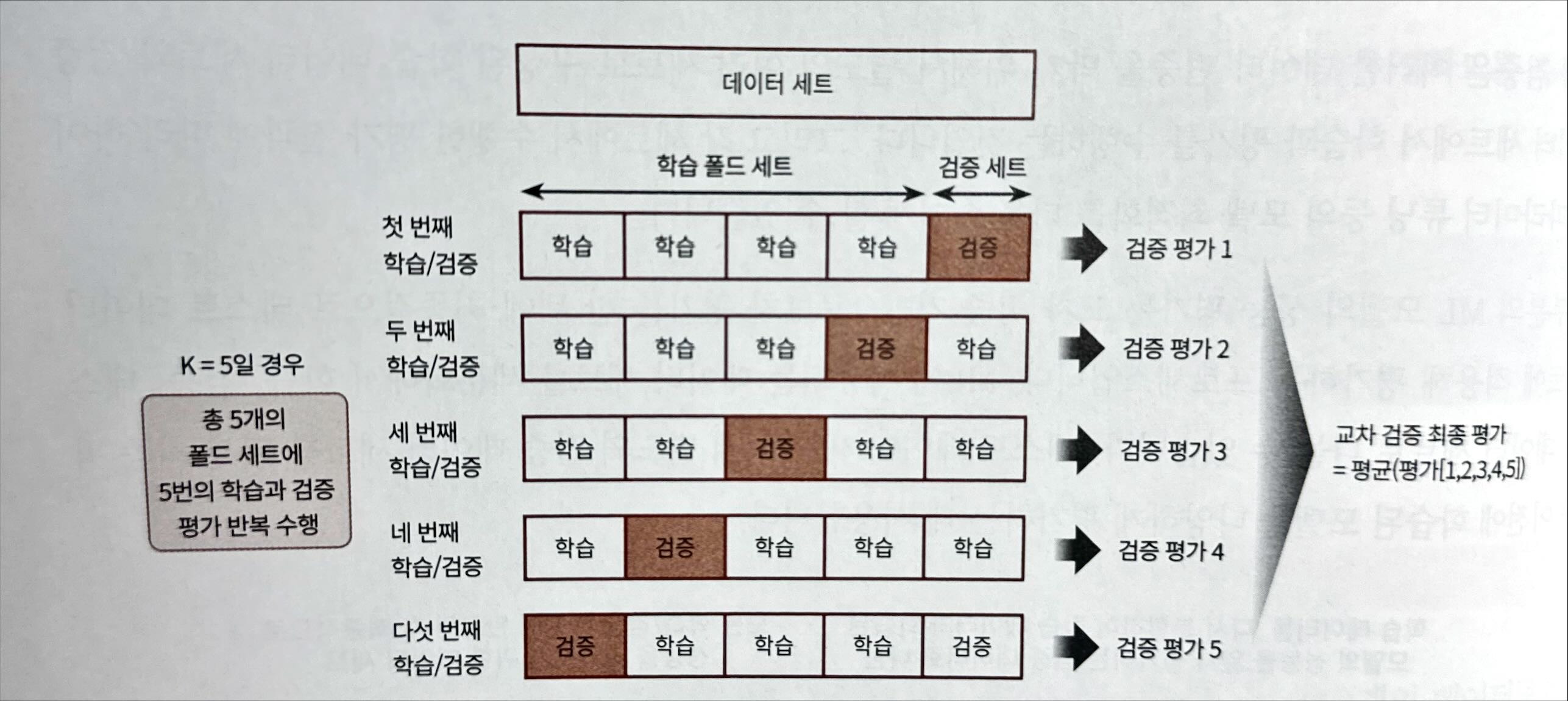

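A method that creates k folds (pieces) of the data and repeats training and validation k times, using a different fold as the validation set each time

- The figure shows 5-fold cross-validation (k=5)

- The 5 folds take turns serving as training and validation data, evaluation is run 5 times, and the average of the 5 results is used as the measure of predictive performance

- Rotating the training and validation sets in this way, through to the 5th (k-th) round, is k-fold cross-validation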
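The rotation described above can be sketched by hand with NumPy. This is only an illustration of the idea on 10 hypothetical row indices, not how scikit-learn implements it.

```python
import numpy as np

n_samples, k = 10, 5
indices = np.arange(n_samples)

# Split the row indices into k contiguous folds (no shuffling)
folds = np.array_split(indices, k)

splits = []
for i in range(k):
    val_idx = folds[i]
    # Every fold except the i-th one is used for training
    train_idx = np.concatenate([folds[j] for j in range(k) if j != i])
    splits.append((train_idx, val_idx))
    print(f"round {i + 1}: train={train_idx}, validation={val_idx}")
```

Each row appears in exactly one validation fold, so every sample is used for validation once and for training k-1 times.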

scikit-learn provides KFold and StratifiedKFold for implementing the k-fold cross-validation process

from sklearn.tree import DecisionTreeClassifier

from sklearn.metrics import accuracy_score

from sklearn.model_selection import KFold

from sklearn.datasets import load_iris

import numpy as np

iris = load_iris()

features = iris.data

label = iris.target

dt_clf = DecisionTreeClassifier(random_state=156)

# Create a KFold object that splits the data into 5 folds, and a list to hold the accuracy of each fold

kfold = KFold(n_splits=5)

cv_accuracy = []

print('Iris data set size:', features.shape[0])

>>> Iris data set size: 150

Now that a KFold object has been created with KFold(n_splits=5), call its split() to divide the full iris data set into 5 folds

n_iter = 0

# KFold객체의 split( ) 호출하면 폴드 별 학습용, 검증용 테스트의 로우 인덱스를 array로 반환

for train_index, test_index in kfold.split(features):

# kfold.split( )으로 반환된 인덱스를 이용하여 학습용, 검증용 테스트 데이터 추출

X_train, X_test = features[train_index], features[test_index]

y_train, y_test = label[train_index], label[test_index]

#학습 및 예측

dt_clf.fit(X_train , y_train)

pred = dt_clf.predict(X_test)

n_iter += 1

# 반복 시 마다 정확도 측정

accuracy = np.round(accuracy_score(y_test,pred), 4)

train_size = X_train.shape[0]

test_size = X_test.shape[0]

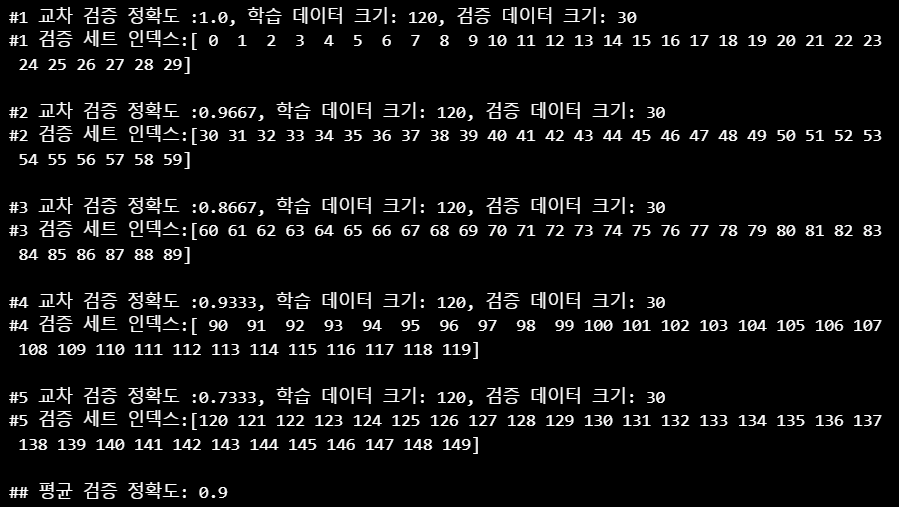

print('\n#{0} 교차 검증 정확도 :{1}, 학습 데이터 크기: {2}, 검증 데이터 크기: {3}'

.format(n_iter, accuracy, train_size, test_size))

print('#{0} 검증 세트 인덱스:{1}'.format(n_iter,test_index))

cv_accuracy.append(accuracy)

# 개별 iteration별 정확도를 합하여 평균 정확도 계산

print('\n## 평균 검증 정확도:', np.mean(cv_accuracy))

- 5번 교차 검증 결과 평균 검증 정확도는 0.9이고, 교차 검증 시마다 검증 세트의 인덱스가 달라짐을 알 수 있음!

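b. The StratifiedKFold class

Stratified K-Fold

A k-fold scheme for label (target class) data with an imbalanced distribution

- An imbalanced label distribution means that certain label values are unusually frequent or very rare, so the distribution is skewed to one side

It addresses the case where K-fold fails to carry the label distribution of the original data over into the training and test sets!

- To do so, it first looks at the label distribution of the original data and then allocates the training and validation sets so that each follows the same distribution

Suppose we are predicting loan fraud!

- The data set has 100 million records, made up of dozens of features and a label indicating loan fraud (fraud: 1, normal: 0)

- Most of the records, however, will be normal loans!

- If there are about 1,000 fraud cases, the fraud label appears in only 0.001% of the data (1,000 / 100,000,000), a very small fraction

- In that situation, even if K-fold picks the training and test indices at random, it can easily fail to reflect the ratio of label values 0 and 1!

- It is therefore very important to keep a fraud-label distribution in the training/test sets that is similar to the original data!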
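A scaled-down sketch of the same situation: the toy labels below (990 normal, 10 fraud, stored in that order) are hypothetical and far smaller than the 100 million records in the example, but they show how the two splitters distribute a rare label across validation folds.

```python
import numpy as np
from sklearn.model_selection import KFold, StratifiedKFold

# Hypothetical toy labels: 990 normal (0) followed by 10 fraud (1)
y = np.array([0] * 990 + [1] * 10)
X = np.zeros((len(y), 1))  # placeholder features; only the labels matter here

def positives_per_fold(splitter):
    """Count how many fraud labels land in each validation fold."""
    return [int(y[test_idx].sum()) for _, test_idx in splitter.split(X, y)]

kfold_counts = positives_per_fold(KFold(n_splits=5))
skf_counts = positives_per_fold(StratifiedKFold(n_splits=5))

print('KFold          :', kfold_counts)
print('StratifiedKFold:', skf_counts)
```

With unshuffled KFold, every fraud case falls into the last fold, so four of the five validation folds contain no fraud at all, while StratifiedKFold spreads them evenly.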

Note

First we look at the problem K-fold has, then fix it with scikit-learn's StratifiedKFold class!

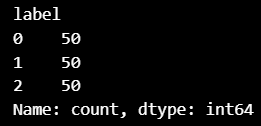

- Build a simple DataFrame from the iris data set and check the distribution of the label values

import pandas as pd

iris = load_iris()

iris_df = pd.DataFrame(data=iris.data, columns=iris.feature_names)

iris_df['label']=iris.target

iris_df['label'].value_counts()

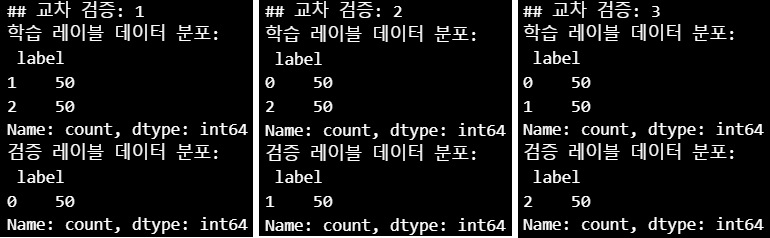

- Check the distribution of the training/validation label values produced in each round of cross-validation

kfold = KFold(n_splits=3)

# kfold.split(X) returns the row indices of the training/validation data, which change on each of the 3 iterations

n_iter = 0

for train_index, test_index in kfold.split(iris_df):

    n_iter += 1

    label_train = iris_df['label'].iloc[train_index]

    label_test = iris_df['label'].iloc[test_index]

    print('## Cross-validation: {0}'.format(n_iter))

    print('Training label distribution:\n', label_train.value_counts())

    print('Validation label distribution:\n', label_test.value_counts())

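- In every round, the training labels and validation labels drawn from the 3 folds consist of completely different values: the iris rows are sorted by label, so each validation fold holds exactly one class, and that class never appears in the corresponding training data

- When the cross-validation data is split this way, the validation accuracy can only be 0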
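The claim that accuracy drops to 0 can be checked directly. The sketch below reuses the decision tree settings from the earlier examples; the variable names are illustrative.

```python
from sklearn.datasets import load_iris
from sklearn.metrics import accuracy_score
from sklearn.model_selection import KFold
from sklearn.tree import DecisionTreeClassifier

iris = load_iris()
X, y = iris.data, iris.target

# Unshuffled 3-fold split: each validation fold holds exactly one iris class
kfold = KFold(n_splits=3)

accuracies = []
for train_idx, val_idx in kfold.split(X):
    clf = DecisionTreeClassifier(random_state=156)
    clf.fit(X[train_idx], y[train_idx])
    # The validation class was never seen in training, so it can never be predicted
    accuracies.append(accuracy_score(y[val_idx], clf.predict(X[val_idx])))

print('Fold accuracies:', accuracies)
```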

- 동일한 데이터 분할을

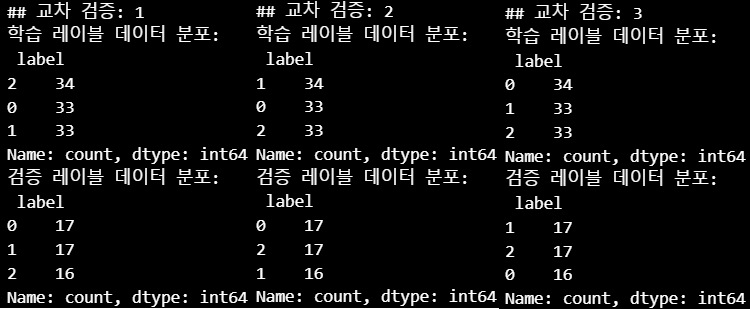

StratifiedKFold로 수행하고 학습/검증 label 데이터의 분포도 확인

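StratifiedKFold is used in almost the same way as KFold, with one important difference: because StratifiedKFold splits the training/validation data according to the label distribution, its split() method requires the label data set as an argument in addition to the feature data set!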

from sklearn.model_selection import StratifiedKFold

skf = StratifiedKFold(n_splits=3)

n_iter = 0

for train_index, test_index in skf.split(iris_df, iris_df['label']):

    n_iter += 1

    label_train = iris_df['label'].iloc[train_index]

    label_test = iris_df['label'].iloc[test_index]

    print('## Cross-validation: {0}'.format(n_iter))

    print('Training label distribution:\n', label_train.value_counts())

    print('Validation label distribution:\n', label_test.value_counts())

- The output shows that the training labels and validation labels are allocated with almost identical distributions

- Only with a split like this can the model learn all of the label values 0, 1, and 2 and then be validated on them

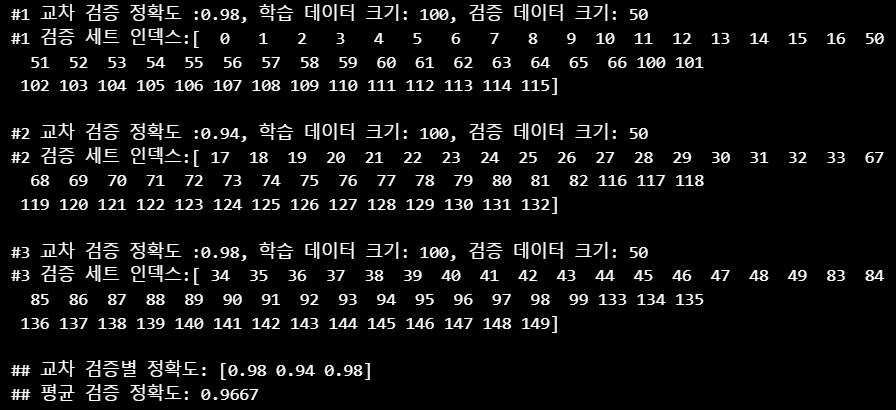

Cross-validating the iris data with StratifiedKFold

dt_clf = DecisionTreeClassifier(random_state=156)

skfold = StratifiedKFold(n_splits=3)

n_iter=0

cv_accuracy=[]

# StratifiedKFold's split() also requires the label data set as input

for train_index, test_index in skfold.split(features, label):

    # Use the indices returned by split() to extract the training and validation data

    X_train, X_test = features[train_index], features[test_index]

    y_train, y_test = label[train_index], label[test_index]

    # Train and predict

    dt_clf.fit(X_train, y_train)

    pred = dt_clf.predict(X_test)

    # Measure the accuracy on each iteration

    n_iter += 1

    accuracy = np.round(accuracy_score(y_test, pred), 4)

    train_size = X_train.shape[0]

    test_size = X_test.shape[0]

    print('\n#{0} CV accuracy: {1}, training data size: {2}, validation data size: {3}'

          .format(n_iter, accuracy, train_size, test_size))

    print('#{0} validation set indices: {1}'.format(n_iter, test_index))

    cv_accuracy.append(accuracy)

# Accuracy per round and mean accuracy

print('\n## Accuracy per round:', np.round(cv_accuracy, 4))

print('## Mean validation accuracy:', np.round(np.mean(cv_accuracy), 4))

Note

Stratified K-Fold builds training and validation sets that reflect the label distribution of the original data, so for data sets with a skewed label distribution, cross-validation should always be done with Stratified K-Fold!

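c. Simpler cross-validation - cross_val_score()

- scikit-learn provides an API that makes cross-validation more convenient

Steps in the code that trains and predicts with KFold

- Set up the folds

- Extract the training and test data indices repeatedly in a for loop

- Repeatedly train and predict, then return the prediction performance

cross_val_score() is an API that performs this whole sequence in one call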

cross_val_score()

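cross_val_score(estimator, X, y=None, scoring=None, cv=None, n_jobs=1, verbose=0, fit_params=None, pre_dispatch='2*n_jobs')

- Main parameters: estimator, X, y, scoring, cv (the signature above follows older scikit-learn releases; newer releases default n_jobs to None and rename fit_params to params)

- estimator: a classifier or regressor object

- X: the feature data set

- y: the label data set

- scoring: the evaluation metric for predictive performance

- cv: the number of cross-validation folds; a KFold or StratifiedKFold object can also be passed

- The return value is an array holding the metric specified by scoring, one value per fold

- When a classifier is passed together with an integer cv, the training/test sets are split with Stratified K-Fold according to the label distribution (regression targets cannot be stratified, so K-Fold is used instead)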

from sklearn.tree import DecisionTreeClassifier

from sklearn.model_selection import cross_val_score , cross_validate

from sklearn.datasets import load_iris

iris_data = load_iris()

dt_clf = DecisionTreeClassifier(random_state=156)

data = iris_data.data

label = iris_data.target

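# The metric is accuracy, with 3 cross-validation folds

scores = cross_val_score(dt_clf, data, label, scoring='accuracy', cv=3)

print('Accuracy per fold:', np.round(scores, 4))

print('Mean validation accuracy:', np.round(np.mean(scores), 4))

>>> Accuracy per fold: [0.98 0.94 0.98]

Mean validation accuracy: 0.9667

- The cross_val_score() API fits, predicts with, and evaluates the Estimator internally, so cross-validation takes very little code!

- Passing an integer (the number of folds) to the cv parameter uses StratifiedKFold internally when the estimator is a classifier

- A similar API, cross_validate(), also exists

- It can return several evaluation metrics at once

- It also reports the fit and score times, and the metrics on the training data when return_train_score=True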
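Both points can be checked with a short sketch. The metric list ('accuracy', 'f1_macro') is chosen here only for illustration; the estimator settings mirror the example above.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.model_selection import StratifiedKFold, cross_val_score, cross_validate
from sklearn.tree import DecisionTreeClassifier

iris = load_iris()
X, y = iris.data, iris.target
dt_clf = DecisionTreeClassifier(random_state=156)

# For a classifier, cv=3 should behave like an unshuffled StratifiedKFold(n_splits=3)
scores_int = cross_val_score(dt_clf, X, y, scoring='accuracy', cv=3)
scores_skf = cross_val_score(dt_clf, X, y, scoring='accuracy',
                             cv=StratifiedKFold(n_splits=3))

# cross_validate() accepts several metrics and can also report training scores
results = cross_validate(dt_clf, X, y, cv=3,
                         scoring=['accuracy', 'f1_macro'],
                         return_train_score=True)

print(np.allclose(scores_int, scores_skf))
print(sorted(results.keys()))
```

cross_validate() returns a dictionary of arrays, one entry per fold, keyed by names such as test_accuracy, train_accuracy, fit_time, and score_time.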

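3. GridSearchCV - cross-validation and optimal hyperparameter tuning in one step

Note

Hyperparameter

- A value the user sets directly before training the model; adjusting it improves the algorithm's predictive performance

GridSearchCV

- The hyperparameter optimization API provided by scikit-learn

- Offers a convenient way to find the best parameters by feeding in the hyperparameters of an algorithm such as a Classifier or Regressor one combination after another

- "Grid" refers to a lattice: the parameter values are tried exhaustively, point by point

- Suppose we want to find the best-performing parameter combination by varying several hyperparameters of the decision tree algorithm in turn

- We can build a set of parameters like the following and apply them one by one to optimize the model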

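grid_parameters = {'max_depth': [1, 2, 3],

                   'min_samples_split': [2, 3]}

GridSearchCV finds the optimal values of these hyperparameters on the basis of cross-validation!

- Automatically splits the data set into training/test sets for cross-validation

- Applies every parameter combination described in the hyperparameter grid in turn, so the best one can be found

- However, because every combination is tested one after another, the run time is relatively long!

- In the case above, with 3-fold CV, 3 CV folds x 6 parameter combinations = 18 rounds of training/evaluation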
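The count of 18 can be reproduced by enumerating the grid with scikit-learn's ParameterGrid helper. The variable cv_folds is just an illustrative name for the number of folds.

```python
from sklearn.model_selection import ParameterGrid

grid_parameters = {'max_depth': [1, 2, 3],
                   'min_samples_split': [2, 3]}
cv_folds = 3

# Every combination of the listed values: 3 x 2 = 6
combinations = list(ParameterGrid(grid_parameters))
for params in combinations:
    print(params)

total_fits = len(combinations) * cv_folds
print('combinations:', len(combinations), 'fits during the search:', total_fits)
```

With refit=True, one more fit on the whole training set follows the search, so the total grows quickly as more values are added to the grid.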

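The GridSearchCV class

- estimator: a classifier, regressor, or pipeline can be used

- param_grid: a dictionary mapping keys to lists of values (the parameter names and the candidate values used to tune the estimator)

- scoring: how predictive performance is measured; usually a string naming one of scikit-learn's evaluation metrics (e.g. 'accuracy'), but a custom scoring function can also be given

- cv: the number of training/test splits used for cross-validation

- refit: defaults to True; when True, the given estimator is retrained with the best hyperparameters once they have been found

<Example> Using GridSearchCV to predict the iris data while applying several decision tree tuning parameters in turn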

from sklearn.datasets import load_iris

from sklearn.tree import DecisionTreeClassifier

from sklearn.model_selection import GridSearchCV, train_test_split

# Load the data and split it into training and test data

iris = load_iris()

X_train, X_test, y_train, y_test = train_test_split(iris.data, iris.target, test_size=0.2, random_state=121)

dtree = DecisionTreeClassifier()

### Set the parameters as a dictionary

parameters = {'max_depth': [1, 2, 3], 'min_samples_split': [2, 3]}

- First split the training data and test data with train_test_split()

- Set the hyperparameters to test as a dictionary

import pandas as pd

# param_grid의 하이퍼 파라미터들을 3개의 train, test set fold 로 나누어서 테스트 수행 설정.

### refit=True 가 default 임. True이면 가장 좋은 파라미터 설정으로 재 학습 시킴.

grid_dtree = GridSearchCV(dtree, param_grid=parameters, cv=3, refit=True)

# 붓꽃 Train 데이터로 param_grid의 하이퍼 파라미터들을 순차적으로 학습/평가 .

grid_dtree.fit(X_train, y_train)

# GridSearchCV 결과 추출하여 DataFrame으로 변환

scores_df = pd.DataFrame(grid_dtree.cv_results_)

scores_df[['params', 'mean_test_score', 'rank_test_score',

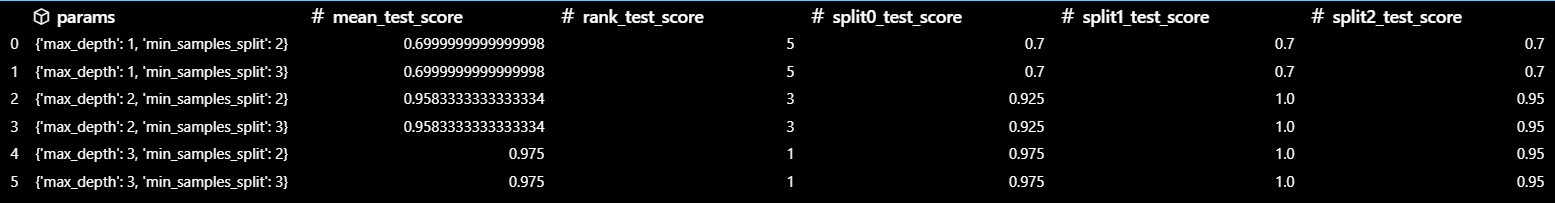

'split0_test_score', 'split1_test_score', 'split2_test_score']]- 학습 데이터 세트를

GridSearchCV객체의fit()메서드에 인자로 입력 - 학습 데이터를

cv에 기술된 폴딩 세트로 분할해param_grid에 기술된 하이퍼 파라미터를 순차적으로 변경하면서 학습/평가를 수행하고 그 결과를cv_results_속성에 기록

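<Results>

- The params column shows the individual hyperparameter values applied on each run

- rank_test_score ranks the hyperparameter combinations by score (1 is the best rank, and its parameters are the optimal hyperparameters)

- mean_test_score is the mean of the scores over the CV validation folds for each hyperparameter combination

print('GridSearchCV best parameters:', grid_dtree.best_params_)

print('GridSearchCV best accuracy: {0:.4f}'.format(grid_dtree.best_score_))

After fit() is run on the GridSearchCV object, the best-performing hyperparameter values and the corresponding score are recorded in the best_params_ and best_score_ attributes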
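The link between cv_results_ and the best_* attributes can be seen by looking up the row at best_index_. This sketch rebuilds the search from scratch; random_state=156 on the tree is an added assumption so that repeated runs agree.

```python
import pandas as pd
from sklearn.datasets import load_iris
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.tree import DecisionTreeClassifier

iris = load_iris()
X_train, X_test, y_train, y_test = train_test_split(
    iris.data, iris.target, test_size=0.2, random_state=121)

grid = GridSearchCV(DecisionTreeClassifier(random_state=156),
                    param_grid={'max_depth': [1, 2, 3], 'min_samples_split': [2, 3]},
                    cv=3)
grid.fit(X_train, y_train)

results = pd.DataFrame(grid.cv_results_)
# best_index_ is the row of cv_results_ that best_params_ and best_score_ come from
best_row = results.loc[grid.best_index_]

print(best_row['params'], best_row['rank_test_score'], best_row['mean_test_score'])
```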

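# The estimator returned here was already trained by GridSearchCV's refit

estimator = grid_dtree.best_estimator_

# GridSearchCV's best_estimator_ has already been trained with the best hyperparameters

pred = estimator.predict(X_test)

print('Test data set accuracy: {0:.4f}'.format(accuracy_score(y_test, pred)))

>>> Test data set accuracy: 0.9667

- With refit=True, GridSearchCV retrains the Estimator with the best-performing hyperparameters and stores it as best_estimator_

- With refit=False, the best model (best_estimator_) is not retrained automatically!

- That is, the best_estimator_ attribute does not exist; the cross-validation results (cv_results_) are still provided, and with a single scoring metric so are best_params_ and best_score_

- Measuring accuracy on the test data set with the already trained best_estimator_ gives about 96.67%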
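The effect of refit can be observed by running the same search twice. This is a minimal sketch on the full iris data, with random_state=156 added as an assumption to keep both searches deterministic.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import GridSearchCV
from sklearn.tree import DecisionTreeClassifier

iris = load_iris()
param_grid = {'max_depth': [1, 2, 3], 'min_samples_split': [2, 3]}

grid_refit = GridSearchCV(DecisionTreeClassifier(random_state=156),
                          param_grid=param_grid, cv=3, refit=True)
grid_no_refit = GridSearchCV(DecisionTreeClassifier(random_state=156),
                             param_grid=param_grid, cv=3, refit=False)
grid_refit.fit(iris.data, iris.target)
grid_no_refit.fit(iris.data, iris.target)

# best_estimator_ is only created when the search refits the best model
has_best_with_refit = hasattr(grid_refit, 'best_estimator_')
has_best_without_refit = hasattr(grid_no_refit, 'best_estimator_')

print(has_best_with_refit, has_best_without_refit)
print(grid_no_refit.best_params_)
```

With refit=False the search results can still be inspected, but a model has to be trained separately with the chosen parameters before it can predict.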

Tip

The usual way to apply a machine learning model is to tune the hyperparameters with GridSearchCV on the training data and then evaluate the result on a separate test set